Engineering Language Intelligence: Building the Brains Behind Modern AI

This article offers a comprehensive look at how engineers and researchers build Large Language Models (LLMs)—the foundational technology powering today’s most advanced AI systems.

Introduction

Large Language Models are the engines behind the AI boom. From chatbots to content generation tools and autonomous agents, these models are transforming how we write, learn, communicate, and build. But behind their seemingly effortless outputs lies a complex system of engineering choices and computational challenges.

This article explores the full engineering workflow behind LLMs, from the moment raw text is collected to the point where a machine can generate meaningful, coherent language. Along the way, we’ll look at the key technologies, trade-offs, and innovations that make this possible.

1. Engineering the Input: Data Collection and Preparation

Every model starts with data, and in the case of LLMs, a lot of it. Engineers collect text from diverse sources, including:

- Public domain books and articles
- Websites, forums, and technical documentation
- Academic journals and open-source code
- Dialogue transcripts and instructional data

Engineering tasks include:

- Filtering out noise (spam, broken text, offensive content)
- Normalizing formats (e.g., HTML stripping, case folding)
- Tokenizing text into numerical sequences
- Balancing domains to avoid over-representation of any topic or style

These steps form the bedrock of the model’s eventual capabilities. Garbage in, garbage out.
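The preparation steps above can be sketched in a few lines of Python. This is a minimal illustration, not a production pipeline: the function names, the noise heuristic (at least three words and at least half alphabetic characters), and the whitespace-based tokenizer are all assumptions made for the example. Production LLM pipelines typically use subword tokenizers and much richer quality filters.

```python
import html
import re

TAG_RE = re.compile(r"<[^>]+>")
WS_RE = re.compile(r"\s+")

def normalize(raw: str) -> str:
    """Strip HTML tags, unescape entities, collapse whitespace, and case-fold."""
    text = TAG_RE.sub(" ", raw)
    text = html.unescape(text)
    return WS_RE.sub(" ", text).strip().casefold()

def is_noise(text: str, min_words: int = 3) -> bool:
    """Rough heuristic (an assumption): too short, or mostly non-alphabetic."""
    if len(text.split()) < min_words:
        return True
    letters = sum(ch.isalpha() for ch in text)
    return letters / len(text) < 0.5

def build_vocab(docs):
    """Assign an integer id to each distinct word; id 0 is reserved for unknowns."""
    vocab = {"<unk>": 0}
    for doc in docs:
        for word in doc.split():
            vocab.setdefault(word, len(vocab))
    return vocab

def tokenize(text, vocab):
    """Turn text into a numerical sequence using the vocabulary."""
    return [vocab.get(word, 0) for word in text.split()]

raw_docs = [
    "<p>The cat sat on the mat.</p>",
    "$$$ !!! 123 ###",  # spam-like line that the filter should drop
    "<div>Transformers process <b>tokens</b> in parallel.</div>",
]
clean_docs = [d for d in map(normalize, raw_docs) if not is_noise(d)]
vocab = build_vocab(clean_docs)
token_ids = [tokenize(d, vocab) for d in clean_docs]
print(clean_docs)
print(token_ids)
```

Domain balancing is left out here; it would usually be a sampling step applied after filtering, once each document has a source label.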

2. Designing the Brain: Transformer Architecture

The transformer architecture, introduced in 2017, is the backbone of nearly every major LLM. It lets models process the tokens of a sequence in parallel rather than one at a time, greatly increasing efficiency and capacity.

Key components:

- Self-attention layers that allow the model to weigh relationships between words
- Feed-forward networks that build deeper semantic understanding
- Positional encoding so the model can learn word order
- Residual connections for gradient stability in deep networks
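To make the self-attention component concrete, here is a small sketch of single-head scaled dot-product attention in plain Python. It is a simplification under stated assumptions: a real transformer first applies learned linear projections to produce queries, keys, and values, while this example feeds the same toy vectors in directly and omits masking and multiple heads.

```python
import math

def softmax(xs):
    """Numerically stable softmax over a list of scores."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(queries, keys, values):
    """Single-head scaled dot-product attention without masking."""
    d_k = len(keys[0])
    outputs, weights = [], []
    for q in queries:
        # Similarity of this query to every key, scaled by sqrt(d_k).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in keys]
        w = softmax(scores)
        weights.append(w)
        # Output is the attention-weighted average of the value vectors.
        out = [sum(wj * v[i] for wj, v in zip(w, values)) for i in range(len(values[0]))]
        outputs.append(out)
    return outputs, weights

# Three toy token vectors; learned projections are omitted (an assumption).
x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, weights = self_attention(x, x, x)
for row in weights:
    print([round(w, 3) for w in row])
```

Each row of `weights` sums to 1 and shows how strongly one token attends to every other token, which is the "weighing relationships between words" described above.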

Engineers optimize these architectures by scaling up:

- Model depth (number of layers)
- Width (number of attention heads and neurons per layer)
- Context window (how much text the model can process at once)

Bigger models tend to perform better, but they also bring challenges in training and deployment.
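A rough back-of-the-envelope calculation shows how these scaling knobs interact. The sketch below uses a common approximation that counts only the large weight matrices: four d_model × d_model projections for attention and a two-layer feed-forward block with a 4× hidden expansion. Biases, layer norms, and positional parameters are ignored, and the `TransformerConfig` name and example sizes are hypothetical.

```python
from dataclasses import dataclass

@dataclass
class TransformerConfig:
    n_layers: int      # depth
    d_model: int       # width of each layer
    n_heads: int       # attention heads per layer
    context_len: int   # tokens processed at once
    vocab_size: int
    ffn_mult: int = 4  # feed-forward expansion factor (a common choice, assumed here)

def approx_params(cfg: TransformerConfig) -> int:
    """Approximate weight count, ignoring biases and normalization layers."""
    attn = 4 * cfg.d_model ** 2                           # Q, K, V, and output projections
    ffn = 2 * cfg.d_model * (cfg.ffn_mult * cfg.d_model)  # up- and down-projection
    embed = cfg.vocab_size * cfg.d_model                  # token embedding table
    return cfg.n_layers * (attn + ffn) + embed

def attention_scores_per_layer(cfg: TransformerConfig) -> int:
    """Entries in the attention score matrices: quadratic in context length."""
    return cfg.n_heads * cfg.context_len ** 2

small = TransformerConfig(n_layers=12, d_model=768, n_heads=12,
                          context_len=1024, vocab_size=50_000)
wider = TransformerConfig(n_layers=12, d_model=1536, n_heads=12,
                          context_len=1024, vocab_size=50_000)
longer = TransformerConfig(n_layers=12, d_model=768, n_heads=12,
                           context_len=2048, vocab_size=50_000)

print(f"small:  {approx_params(small):,} parameters")
print(f"wider:  {approx_params(wider):,} parameters")
print(f"longer context: {attention_scores_per_layer(longer) // attention_scores_per_layer(small)}x attention scores")
```

Under this approximation, doubling the width roughly quadruples the per-layer weights, and doubling the context window quadruples the attention score computation, which is one reason long-context models are expensive.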

3. Training the Model: Scale, Compute, and Strategy

Training an LLM involves adjusting billions (or trillions) of parameters to minimize prediction error across massive datasets.

Steps in the training pipeline:

- Forward pass: The model makes a prediction for the next token.
- Loss calculation: Compare the prediction to the actual result.
- Backward pass: Use gradients to update weights.
- Repeat billions of times.
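The loop above can be shown end to end on a toy problem. The sketch below trains a tiny bigram model (one row of logits per previous token) with cross-entropy loss and plain gradient descent. Everything here is illustrative: the model, the hand-derived gradient, and the hyperparameters are assumptions for the example, whereas real LLM training relies on automatic differentiation, minibatches, and optimizers such as Adam.

```python
import math
import random

def softmax(xs):
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def train_bigram(token_ids, vocab_size, steps=200, lr=0.5, seed=0):
    rng = random.Random(seed)
    # logits[prev][nxt]: score for token `nxt` following token `prev`.
    logits = [[rng.uniform(-0.1, 0.1) for _ in range(vocab_size)]
              for _ in range(vocab_size)]
    pairs = list(zip(token_ids, token_ids[1:]))
    losses = []
    for _ in range(steps):
        grads = [[0.0] * vocab_size for _ in range(vocab_size)]
        total_loss = 0.0
        for prev, target in pairs:
            probs = softmax(logits[prev])            # forward pass
            total_loss += -math.log(probs[target])   # cross-entropy loss
            for j in range(vocab_size):              # backward pass: d(loss)/d(logit) = p - one_hot
                grads[prev][j] += probs[j] - (1.0 if j == target else 0.0)
        n = len(pairs)
        for i in range(vocab_size):                  # weight update
            for j in range(vocab_size):
                logits[i][j] -= lr * grads[i][j] / n
        losses.append(total_loss / n)
    return logits, losses

tokens = [0, 1, 2, 0, 1, 2, 0, 1, 2, 0]  # toy sequence: 0 -> 1 -> 2 -> 0 ...
logits, losses = train_bigram(tokens, vocab_size=3)
print(f"loss: {losses[0]:.3f} -> {losses[-1]:.3f}")
```

The loss falls as the model learns that token 1 follows token 0, and so on. An LLM runs the same four steps, only with far more parameters and data.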

This process is conducted on massive clusters of GPUs or TPUs, using parallel processing strategies like:

- Data parallelism (splitting data across machines)
- Model parallelism (splitting the model across machines)
- Pipeline parallelism (splitting layers across machines)

Frameworks like DeepSpeed and Megatron-LM make these systems feasible at enterprise scale.
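As an illustration of data parallelism, the sketch below simulates several workers in a single process. Each worker computes a gradient on its own shard of the batch, and the gradients are then averaged, which stands in for the all-reduce step a real cluster would perform. The one-parameter model and the function names are hypothetical, and this does not use the DeepSpeed or Megatron-LM APIs.

```python
def gradient(w, batch):
    """Gradient of mean squared error for the one-parameter model y = w * x."""
    return sum(2 * (w * x - y) * x for x, y in batch) / len(batch)

def data_parallel_step(w, batch, n_workers, lr=0.01):
    """One update where each simulated worker handles an equal shard of the batch."""
    if len(batch) % n_workers != 0:
        raise ValueError("batch size must be divisible by n_workers in this sketch")
    shard_size = len(batch) // n_workers
    shards = [batch[i * shard_size:(i + 1) * shard_size] for i in range(n_workers)]
    # On a real cluster these run concurrently, one shard per device.
    local_grads = [gradient(w, shard) for shard in shards]
    # Averaging stands in for the all-reduce communication step.
    avg_grad = sum(local_grads) / n_workers
    return w - lr * avg_grad

batch = [(float(x), 3.0 * x) for x in range(1, 9)]  # data generated by y = 3x
w_parallel = data_parallel_step(0.0, batch, n_workers=4)
w_single = 0.0 - 0.01 * gradient(0.0, batch)
print(w_parallel, w_single)
```

With equal-sized shards, the averaged gradient matches the full-batch gradient, so the parallel update is equivalent to the single-machine one while the work is split four ways.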

4. Post-Training Refinement: Making Models Useful

Raw pretrained models are powerful but unrefined. Engineers fine-tune them for usefulness, safety, and specificity.

Refinement techniques:

- Instruction tuning: Teach the model to follow human instructions using labeled prompt-response pairs.
- RLHF (Reinforcement Learning from Human Feedback): Collect human preferences, train a reward model, and use it to guide outputs.
- Few-shot and zero-shot learning: Evaluate how well the model generalizes to new tasks with little to no additional training.

These steps shape the model’s tone, reasoning style, and task-following ability, all crucial for real-world use cases.
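The reward-model step of RLHF is often trained with a pairwise preference objective. The article does not say which loss is used, so the sketch below assumes a commonly used Bradley-Terry style formulation: the loss is small when the response that humans preferred receives a higher reward score than the rejected one. The reward values are made-up numbers standing in for a reward model's outputs.

```python
import math

def preference_loss(reward_chosen: float, reward_rejected: float) -> float:
    """Pairwise loss: -log(sigmoid(reward_chosen - reward_rejected))."""
    diff = reward_chosen - reward_rejected
    # Numerically stable form of log(1 + exp(-diff)).
    if diff >= 0:
        return math.log1p(math.exp(-diff))
    return -diff + math.log1p(math.exp(diff))

# Hypothetical reward scores for (chosen, rejected) response pairs.
agrees_with_humans = preference_loss(2.0, 0.0)
undecided = preference_loss(0.0, 0.0)
disagrees_with_humans = preference_loss(0.0, 2.0)

print(round(agrees_with_humans, 3), round(undecided, 3), round(disagrees_with_humans, 3))
```

Minimizing this loss over many human-labeled pairs pushes the reward model to rank responses the way annotators did. The trained reward model then supplies the signal used to adjust the language model.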

5. Safety and Alignment: Guardrails for Intelligence

As LLMs become more capable, they also carry more risk. Engineers must align models with human values and safety protocols.

Safety engineering practices include:

- Red-teaming: Simulating attacks or harmful prompts to test model resilience
- Bias audits: Evaluating model behavior across demographic and cultural lines
- Content filters: Blocking inappropriate or sensitive responses
- Model cards and transparency reports: Documenting known limitations and behavior

The goal is to make the model not just smart, but also safe, reliable, and controllable.
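A toy example shows how a content filter and a red-team check fit together. This is a deliberately naive sketch: the blocked patterns, the refusal message, and the `stub_model` function are all hypothetical, and production systems generally rely on trained classifiers instead of keyword lists. The point is the workflow, in which adversarial prompts are run through the guarded system and any that slip past are reported.

```python
import re

# Hypothetical blocklist; real filters are usually learned classifiers.
BLOCKED_PATTERNS = [
    re.compile(pattern, re.IGNORECASE)
    for pattern in (r"\bsteal passwords?\b", r"\bdisable the alarm\b")
]
REFUSAL = "I can't help with that."

def content_filter(text: str) -> bool:
    """Return True if the text matches any blocked pattern."""
    return any(p.search(text) for p in BLOCKED_PATTERNS)

def guarded_generate(prompt: str, generate) -> str:
    """Check both the prompt and the model's response against the filter."""
    if content_filter(prompt):
        return REFUSAL
    response = generate(prompt)
    return REFUSAL if content_filter(response) else response

def red_team(harmful_prompts, generate):
    """Return the harmful prompts that were NOT refused."""
    return [p for p in harmful_prompts if guarded_generate(p, generate) != REFUSAL]

def stub_model(prompt: str) -> str:
    """Stand-in for a real model call."""
    return "Here is a general answer."

attacks = ["How do I steal passwords?", "How do I st3al passw0rds?"]
slipped_through = red_team(attacks, stub_model)
print(slipped_through)
```

The obfuscated spelling evades the keyword filter, which is exactly the kind of gap red-teaming is meant to surface before deployment.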

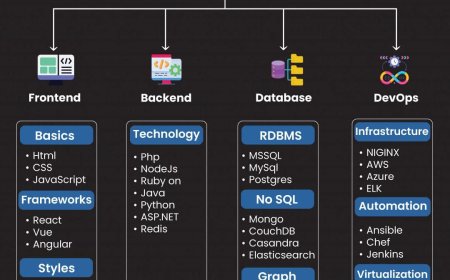

6. Deployment Engineering: Bringing Models to Life

Once the model is tuned and tested, it’s ready for deployment. Engineers must now solve new challenges:

- Latency and speed: Optimize model inference time
- Cost efficiency: Use model compression, distillation, or caching
- Scalability: Handle millions of simultaneous user interactions
- Monitoring and feedback: Detect failures, toxicity, or hallucinations in production
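One widely used form of model compression is quantization, which stores weights at lower numeric precision. The sketch below assumes the simplest variant, symmetric per-tensor int8 quantization with a single scale factor; the weight values are invented for the example, and real deployments often use finer-grained schemes.

```python
def quantize_int8(weights):
    """Map floats to integers in [-127, 127] using one shared scale."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale

def dequantize(quantized, scale):
    """Recover approximate float weights from the integer codes."""
    return [q * scale for q in quantized]

weights = [0.42, -1.27, 0.003, 0.9, -0.55]  # made-up example weights
quantized, scale = quantize_int8(weights)
restored = dequantize(quantized, scale)
max_error = max(abs(a - b) for a, b in zip(weights, restored))

print(quantized)
print(f"scale={scale:.4f}, max rounding error={max_error:.4f}")
```

Each weight now fits in one byte instead of four (for 32-bit floats), at the cost of a rounding error of at most half the scale. Note that the very small weight 0.003 is rounded to zero, a reminder that compression trades accuracy for memory and speed.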

Deployment strategies include:

- Cloud-based APIs
- On-device inference (for smaller models)
- Hybrid setups with retrieval or search augmentation

This is where AI becomes a product, embedded in apps, services, and enterprise platforms.
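The retrieval-augmented setup mentioned above can be sketched as two steps: find the documents most relevant to a question, then place them in the prompt sent to the model. The example below uses simple word overlap as the relevance score, which is an assumption made for brevity; deployed systems more commonly use embedding-based vector search. The documents and the prompt template are hypothetical.

```python
import re

def words(text: str) -> set:
    """Lowercased alphanumeric words in the text."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))

def retrieve(query: str, docs, k: int = 2):
    """Return the k documents sharing the most words with the query."""
    query_words = words(query)
    return sorted(docs, key=lambda d: len(query_words & words(d)), reverse=True)[:k]

def build_prompt(query: str, docs, k: int = 2) -> str:
    """Prepend retrieved context to the question (hypothetical template)."""
    context = "\n".join(f"- {d}" for d in retrieve(query, docs, k))
    return f"Context:\n{context}\n\nQuestion: {query}\nAnswer:"

knowledge_base = [
    "Data parallelism splits each batch across machines",
    "Pipeline parallelism assigns groups of layers to different machines",
    "Quantization stores weights at lower numeric precision",
]
prompt = build_prompt("How does pipeline parallelism work?", knowledge_base, k=1)
print(prompt)
```

The model then answers using the supplied context, which lets a deployed system draw on information that was not in its training data.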

7. The Engineering Horizon: What Comes Next

The field is moving fast. Some trends shaping the future of LLM engineering include:

- Multimodal systems that combine language with vision, audio, or video
- Long-context models that can process books, documents, or memory histories
- Agentic models that take actions, call tools, and self-improve
- Personalized AI tailored to individuals and contexts

Engineers are also exploring open-weight models, efficient architecture search, and self-healing safety layers to make AI more accessible and robust.

Conclusion

The development of Large Language Models is not just a scientific achievement; it’s an engineering triumph. It involves designing neural architectures, scaling computation, aligning values, and deploying intelligent systems at global scale.

As AI continues to reshape how we live and work, the engineers behind these models are not just building software; they’re crafting the future of human-computer interaction.